File Formats#

I present three data formats, feather, parquet and hdf but it exists several more like Apache Avro or Apache ORC.

These data formats may be more appropriate in certain situations. However, the software needed to handle them is either more difficult to install, incomplete, or more difficult to use because less documentation is provided. For ORC and AVRO the python libraries offered are less well maintained than the formats we will see. You can find many on the web but it is hard to know which one is the most stable.

avro and fastavro The following formats are supported by pandas and apache arrow. These softwares are supported by very strong communities.

Feather#

For light data, it is recommanded to use Feather. It is a fast, interoperable data frame storage that comes with bindings for python and R.

Feather uses also the Apache Arrow columnar memory specification to represent binary data on disk. This makes read and write operations very fast.

Parquet file format#

Parquet format is a common binary data store, used particularly in the Hadoop/big-data sphere. It provides several advantages relevant to big-data processing:

The Apache Parquet project provides a standardized open-source columnar storage format for use in data analysis systems. It was created originally for use in Apache Hadoop with systems like Apache Drill, Apache Hive, Apache Impala, and Apache Spark adopting it as a shared standard for high performance data IO.

Hierarchical Data Format#

HDF is a self-describing data format allowing an application to interpret the structure and contents of a file with no outside information. One HDF file can hold a mix of related objects which can be accessed as a group or as individual objects.

Let’s create some big dataframe with consitent data (Floats) and 10% of missing values:

import feather

import pandas as pd

import numpy as np

arr = np.random.randn(500000) # 10% nulls

arr[::10] = np.nan

df = pd.DataFrame({'column_{0}'.format(i): arr for i in range(10)})

%time df.to_csv('test.csv')

%rm test.h5

%time df.to_hdf("test.h5", key="test")

%time df.to_parquet('test.parquet')

%time df.to_feather('test.feather')

%%bash

du -sh test.*

%%time

df = pd.read_csv("test.csv")

len(df)

%%time

df = pd.read_hdf("test.h5")

len(df)

%%time

df = pd.read_parquet("test.parquet")

len(df)

%%time

df = pd.read_feather("test.feather")

len(df)

# Now we create a new big dataframe with a column of strings

import numpy as np

import pandas as pd

from lorem import sentence

words = np.array(sentence().strip().lower().replace(".", " ").split())

# Set the seed so that the numbers can be reproduced.

np.random.seed(0)

n = 1000000

df = pd.DataFrame(np.c_[np.random.randn(n, 5),

np.random.randint(0,10,(n, 2)),

np.random.randint(0,1,(n, 2)),

np.array([np.random.choice(words) for i in range(n)])] ,

columns=list('ABCDEFGHIJ'))

df["A"][::10] = np.nan

len(df)

%%time

df.to_csv('test.csv', index=False)

%%time

df.to_hdf('test.h5', key="test", mode="w")

%%time

df.to_feather('test.feather')

%%time

df.to_parquet('test.parquet')

%%time

df = pd.read_csv("test.csv")

len(df)

%%time

df = pd.read_hdf("test.h5")

len(df)

%%time

df = pd.read_feather('test.feather')

len(df)

%%time

df = pd.read_parquet('test.parquet')

len(df)

df.head(10)

df['J'] = pd.Categorical(df.J)

%time df.to_feather('test.feather')

%time df.to_parquet('test.parquet')

%%time

df = pd.read_feather('test.feather')

len(df)

%%time

df = pd.read_parquet('test.parquet')

len(df)

Feather or Parquet#

Parquet format is designed for long-term storage, where Arrow is more intended for short term or ephemeral storage because files volume are larger.

Parquet is usually more expensive to write than Feather as it features more layers of encoding and compression.

Feather is unmodified raw columnar Arrow memory. We will probably add simple compression to Feather in the future.

Due to dictionary encoding, RLE encoding, and data page compression, Parquet files will often be much smaller than Feather files

Parquet is a standard storage format for analytics that’s supported by Spark. So if you are doing analytics, Parquet is a good option as a reference storage format for query by multiple systems

Apache Arrow#

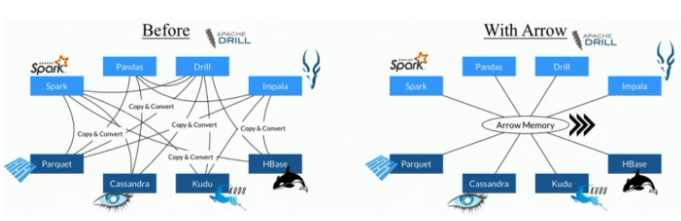

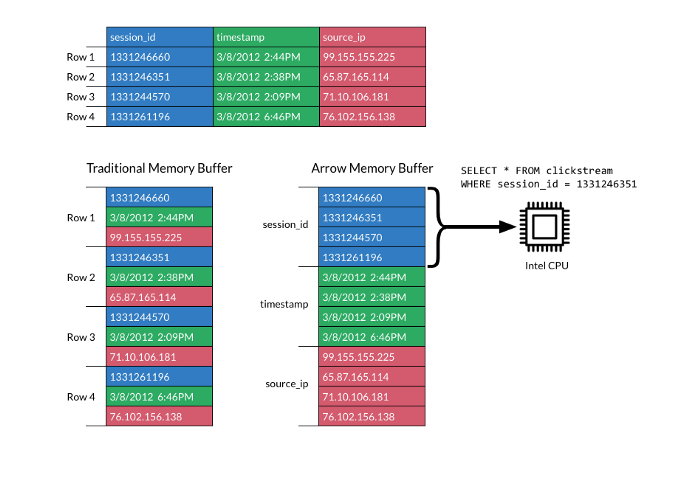

Arrow is a columnar in-memory analytics layer designed to accelerate big data. It houses a set of canonical in-memory representations of hierarchical data along with multiple language-bindings for structure manipulation. Arrow offers an unified way to be able to share the same data representation among languages and it will certainly be the next standard to store dataframes in all languages.

Apache Arrow is an ideal in-memory transport layer for data that is being read or written with Parquet files. PyArrow includes Python bindings to read and write Parquet files with pandas.

columnar storage, only read the data of interest

efficient binary packing

choice of compression algorithms and encoding

split data into files, allowing for parallel processing

range of logical types

statistics stored in metadata allow for skipping unneeded chunks

data partitioning using the directory structure

Example:

import pyarrow as pa

import pyarrow.parquet as pq

import pandas as pd

import numpy as np

arr = np.random.randn(500000) # 10% nulls

arr[::10] = np.nan

df = pd.DataFrame({'column_{0}'.format(i): arr for i in range(10)})

hdfs = pa.hdfs.connect()

table = pa.Table.from_pandas(df)

pq.write_to_dataset(table, root_path="test", filesystem=hdfs)

hdfs.ls("test")

Read CSV from HDFS#

Put the file test.csv on hdfs system

from pyarrow import csv

with hdfs.open("/data/nycflights/1999.csv", "rb") as f:

df = pd.read_csv(f, nrows = 10)

print(df.head())

Read Parquet File from HDFS with pandas#

import pandas as pd

wikipedia = pd.read_parquet("hdfs://svmass2.mass.uhb.fr:54310/data/pagecounts-parquet/part-00007-8575060f-6b57-45ea-bf1d-cd77b6141f70.snappy.parquet", engine=’pyarrow’)

print(wikipedia.head())

Read Parquet File with pyarrow#

table = pq.read_table("example.parquet")

Writing a parquet file from Apache Arrow#

pq.write_table(table, "example.parquet")

Check metadata#

parquet_file = pq.ParquetFile("example.parquet")

print(parquet_file.metadata)

See schema#

print(parquet_file.schema)

Connect to the Hadoop file system#

hdfs = pa.hdfs.connect()

# copy to local

with hdfs.open("user.txt", "rb") as f:

f.download("user.text")

# write parquet file on hdfs

with open("example.parquet", "rb") as f:

pa.HadoopFileSystem.upload(hdfs, "example.parquet", f)

# List files

for f in hdfs.ls("/user/navaro_p"):

print(f)

# create a small dataframe and write it to hadoop file system

small_df = pd.DataFrame(np.array([[1, 2, 3], [4, 5, 6], [7, 8, 9]]), columns=['a', 'b', 'c'])

table = pa.Table.from_pandas(small_df)

pq.write_table(table, "small_df.parquet", filesystem=hdfs)

# Read files from Hadoop with pandas

with hdfs.open("/data/irmar.csv") as f:

df = pd.read_csv(f)

print(df.head())

# Read parquet file from Hadoop with pandas

server = "hdfs://svmass2.mass.uhb.fr:54310"

path = "data/pagecounts-parquet/part-00007-8575060f-6b57-45ea-bf1d-cd77b6141f70.snappy.parquet"

pagecount = pd.read_parquet(os.path.join(server, path), engine="pyarrow")

print(pagecount.head())

# Read parquet file from Hadoop with pyarrow

table = pq.read_table(os.path.join(server,path))

print(table.schema)

df = table.to_pandas()

print(df.head())

Exercise#

Take the second dataframe with string as last column

Create an arrow table from pandas dataframe

Write the file test.parquet from arrow table

Print metadata from this parquet file

Print schema

Upload the file to hadoop file system

Read this file from hadoop file system and print dataframe head

Hint: check the doc https://arrow.apache.org/docs/python/parquet.html